My first attempt at running Kubernetes at home: spoiler alert, it was painful

Join me on a candid journey through my initial, often frustrating, foray into setting up a Kubernetes cluster in my homelab. From battling networking woes to deciphering YAML, I share the painful lessons learned and the eventual triumph of getting it (mostly) working.

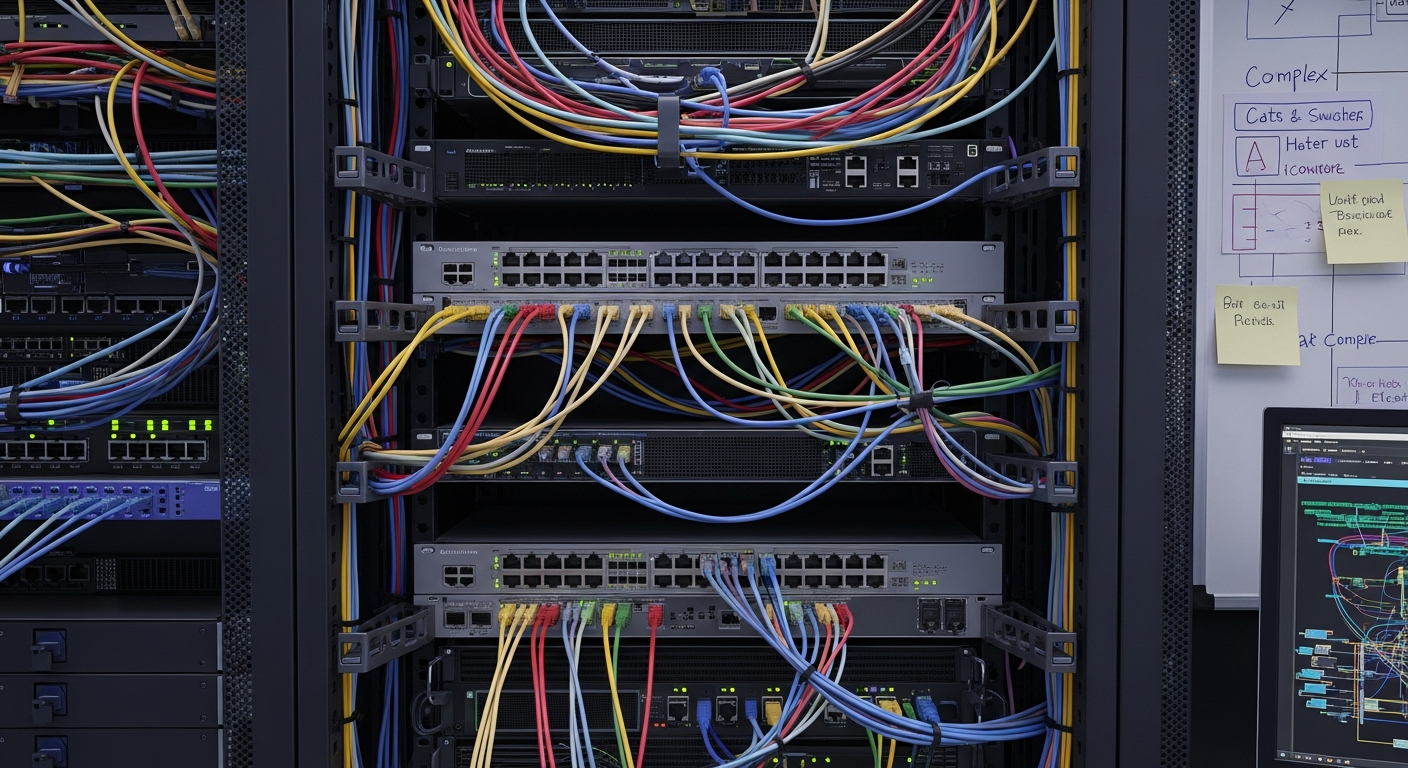

Hey everyone! You know that feeling when you dive headfirst into a new technology, full of excitement and grand visions, only to find yourself tangled in a web of documentation, error messages, and sheer confusion? Well, that was me, just a few months ago, deciding it was high time I brought Kubernetes into my humble homelab.

The Dream vs. The Reality

My goal was ambitious, perhaps overly so for a first-timer: set up a multi-node Kubernetes cluster from scratch, deploy a few services, and bask in the glory of container orchestration. I envisioned seamless deployments, self-healing applications, and a future where my homelab ran with enterprise-grade elegance. The reality? A lot of head-scratching, late nights, and an intimate relationship with kubectl describe pod.

The Setup: A Tale of VMs and Ubuntu

I started with three virtual machines on my Proxmox server: one for the control plane (master) and two worker nodes. All running Ubuntu Server 20.04. My plan was to use kubeadm, the official tool for bootstrapping Kubernetes clusters. Seemed straightforward enough, right? Install Docker, install kubeadm, kubelet, kubectl, and then kubeadm init. Oh, the sweet innocence!

Where the Pain Began: Networking, My Old Foe

As a homelab enthusiast, I thought I had a decent grip on networking. VLANs, firewalls, static IPs – bring it on! But Kubernetes networking? That's a whole different beast. My first major roadblock was picking a Container Network Interface (CNI) plugin. You need one for pods to communicate across nodes, and there are many choices: Calico, Flannel, Weave Net, etc.

Choosing a CNI: Flannel First, Then Calico

I initially went with Flannel because it seemed simpler. I applied the YAML manifest, and things looked okay. Pods were being created, but then... they couldn't talk to each other across nodes. Or worse, I couldn't access my deployed Nginx service from my host machine. I spent hours digging into iptables, checking firewall rules (ufw on Ubuntu), and verifying my subnet configurations. The pod network CIDR, the service CIDR, and how they interacted with my physical network were a constant source of confusion.
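One cheap sanity check for this kind of CIDR confusion is to confirm that the pod network, the service network, and the physical LAN don't overlap. Here is a minimal sketch using Python's standard `ipaddress` module. The pod and service ranges are the Flannel and kubeadm defaults; the LAN subnet is a hypothetical placeholder, so substitute your own values.

```python
import ipaddress
from itertools import combinations


def find_overlaps(ranges):
    """Return pairs of range names whose networks overlap."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in ranges.items()}
    return [(a, b) for a, b in combinations(nets, 2) if nets[a].overlaps(nets[b])]


# Example values only: replace with the ranges from your own cluster and LAN.
ranges = {
    "pod": "10.244.0.0/16",     # Flannel's default pod network
    "service": "10.96.0.0/12",  # kubeadm's default service CIDR
    "lan": "192.168.1.0/24",    # assumed home LAN subnet (hypothetical)
}

print(find_overlaps(ranges))  # [] means no overlaps
```

Worth noting: Calico's default IP pool is 192.168.0.0/16, which would overlap with a 192.168.1.0/24 home network like the one assumed here, so the same check is useful before switching CNIs.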

The Calico Switch and IP-in-IP Woes

After much frustration with Flannel, I decided to rip it out and try Calico, which promised more robust networking features, including network policies. While Calico offered more control, it also introduced new complexities. I encountered issues with its default IP-in-IP encapsulation mode conflicting with my VM network setup, leading to dropped packets and intermittent connectivity. Debugging network issues within Kubernetes is like peeling an onion – every layer reveals another mystery. I learned about:

• Pod CIDRs and Service CIDRs: Understanding how these internal IP ranges are allocated and routed.

• kube-proxy: The unsung hero (or villain, depending on your frustration level) that handles service load balancing and network proxying.

• Firewall Rules: Ensuring the necessary ports (like 6443 for the API server, 10250 for kubelet, etc.) were open on all nodes, and that the CNI could establish its routes.

• Network Policy: How to define rules for pod-to-pod communication, which is powerful but requires a solid understanding of your application's network needs.
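For the firewall item in particular, a quick way to see whether a port is reachable from another machine is a plain TCP connect test. The sketch below uses only Python's standard library. It covers TCP ports such as 6443 and 10250; it says nothing about UDP or other protocols a CNI may rely on, and a successful connection only shows the port is open, not that the component behind it is healthy.

```python
import socket


def tcp_port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def check_nodes(nodes, ports, timeout=2.0):
    """Map each (node, port) pair to whether the port accepted a connection."""
    return {
        (node, port): tcp_port_open(node, port, timeout)
        for node in nodes
        for port in ports
    }
```

As a usage example, `check_nodes(["192.168.1.10"], [6443, 10250])` would test the API server and kubelet ports on a control-plane node at that (hypothetical) address.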

YAML: My New Best Frenemy

Beyond networking, the sheer volume and complexity of Kubernetes YAML manifests were daunting. Every deployment, service, ingress, and persistent volume claim required a precise YAML file. One misplaced space or incorrect indentation could bring everything crashing down. I quickly became proficient with a YAML linter and spent copious amounts of time in the Kubernetes documentation trying to understand every field.
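One way to sidestep indentation mistakes entirely is to build the manifest as a data structure and emit JSON, which `kubectl apply -f` accepts alongside YAML. The following is a minimal sketch, not a recommendation over plain YAML; the function name, the default names, and the `nginx:1.25` image tag are illustrative assumptions.

```python
import json


def nginx_deployment(name="nginx", replicas=2, image="nginx:1.25"):
    """Build a minimal apps/v1 Deployment manifest as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": 80}],
                        }
                    ]
                },
            },
        },
    }


# Write the output to a file and apply it with: kubectl apply -f <file>.json
print(json.dumps(nginx_deployment(), indent=2))
```

Because the structure is nested dicts and lists, a misplaced space cannot change the meaning of the manifest, although a wrong field name still can.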

Lessons Learned (The Hard Way)

• Start Simple: Don't try to deploy a complex multi-tier application on day one. Start with a single Nginx deployment and a NodePort service.

• Read the Docs (Thoroughly): The Kubernetes documentation is vast but incredibly detailed. Focus on the networking concepts early on.

• Understand Your CNI: Don't just copy-paste the CNI manifest. Take the time to understand how your chosen CNI works, its requirements, and its configuration options. This was my biggest networking takeaway.

• Firewall Discipline: Be meticulous with firewall rules. Open only what's necessary, but ensure the core Kubernetes components and your CNI can communicate freely.

• Debugging Tools: Get comfortable with kubectl logs, kubectl describe, and using tools like tcpdump or netshoot (a containerized networking Swiss Army knife) within your pods.

• Patience is a Virtue: Kubernetes has a steep learning curve. Don't get discouraged by errors. Each one is an opportunity to learn.
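For the "start simple" case of one Nginx deployment behind a NodePort service, two mistakes are easy to make: a service selector that doesn't match the pod labels, and a nodePort outside the default 30000-32767 range. Here is a minimal sketch of a pre-flight check for both, operating on manifests already loaded as Python dicts. The function name and the example values are hypothetical.

```python
NODEPORT_RANGE = range(30000, 32768)  # Kubernetes default range: 30000-32767


def check_service(service, deployment):
    """Return a list of problems found between a Service and a Deployment."""
    problems = []
    pod_labels = deployment["spec"]["template"]["metadata"]["labels"]
    spec = service["spec"]

    # Every selector key/value must appear in the pod template labels.
    for key, value in spec.get("selector", {}).items():
        if pod_labels.get(key) != value:
            problems.append(f"selector {key}={value} does not match pod labels")

    # An explicit nodePort must fall inside the allowed range.
    if spec.get("type") == "NodePort":
        for port in spec.get("ports", []):
            node_port = port.get("nodePort")
            if node_port is not None and node_port not in NODEPORT_RANGE:
                problems.append(f"nodePort {node_port} is outside 30000-32767")

    return problems


# Trimmed-down example manifests (only the fields the check reads).
deployment = {"spec": {"template": {"metadata": {"labels": {"app": "nginx"}}}}}
service = {
    "spec": {
        "type": "NodePort",
        "selector": {"app": "nginx"},
        "ports": [{"port": 80, "targetPort": 80, "nodePort": 30080}],
    }
}

print(check_service(service, deployment))  # [] means no problems found
```

A selector mismatch is worth catching early because the service is still created without error; it simply has no endpoints, which looks a lot like a networking fault from the outside.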

The Light at the End of the Tunnel

After days (and what felt like weeks) of troubleshooting, re-initializing clusters, and countless cups of coffee, I finally got a stable, multi-node Kubernetes cluster running. I deployed a simple web application, exposed it via an Ingress controller, and felt a profound sense of accomplishment. It wasn't perfect, and I still have much to learn, but the foundation is there.

My first Kubernetes journey was undeniably painful, especially on the networking front. But it was also incredibly rewarding. I learned more about container networking, Linux system administration, and distributed systems than I ever thought I would. If you're thinking of diving into Kubernetes at home, be prepared for a challenge, but know that the learning experience is invaluable. Happy Kube-ing!